Is Facebook Going After AI Images?

Meta just announced that they’re about to start automatically labeling AI images. You can read their full announcement here. The following is my understanding and takeaways, and clearly not meant as advice, legal or otherwise.

How Facebook is going to distinguish AI images from “real” photos

They can’t do this using an algorithm. This shouldn’t come as a huge surprise to anyone. Midjourney and other image creation tools are so good these days, even I’m having a hard time telling a stock photo apart from an AI image.

So, they’re going to rely on “stamps” embedded in the image itself, in the form of file metadata and watermarks (hidden or visible).

We’re building industry-leading tools that can identify invisible markers at scale – specifically, the “AI generated” information in the C2PA and IPTC technical standards – so we can label images from Google, OpenAI, Microsoft, Adobe, Midjourney, and Shutterstock as they implement their plans for adding metadata to images created by their tools.

I’m impressed that they managed to get such a level of cooperation. I’m not seeing Stable Diffusion or Leonardo here, but all the other main players are there.

And yes, people could potentially remove the metadata and invisible watermarks. Meta is working on ways to counter that by creating invisible watermarks that would be harder to remove. We’ll see how that goes.
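To make the metadata idea a bit more concrete, here's a rough Python sketch of what checking for such a marker could look like. This is my own illustration, not Meta's code. It assumes the image carries an XMP metadata packet that uses the IPTC "Digital Source Type" vocabulary, where the term `trainedAlgorithmicMedia` marks AI-generated media. The function name and the fake in-memory "files" are made up for the example, and a plain byte search like this is crude: it won't see compressed metadata, C2PA manifests, or invisible watermarks.

```python
# Hypothetical helper, for illustration only; this is not Meta's detection code.
# Assumption: the file contains an uncompressed XMP packet whose IPTC
# "Digital Source Type" value is one of the vocabulary terms below.

AI_SOURCE_TYPES = (
    b"digitalsourcetype/trainedAlgorithmicMedia",
    b"digitalsourcetype/compositeWithTrainedAlgorithmicMedia",
)


def has_ai_metadata_marker(image_bytes: bytes) -> bool:
    """Return True if the raw file bytes contain an IPTC AI source-type term."""
    return any(term in image_bytes for term in AI_SOURCE_TYPES)


# Fake "files" built in memory so the sketch runs without any real images.
labeled = (
    b"\xff\xd8<x:xmpmeta><Iptc4xmpExt:DigitalSourceType>"
    b"http://cv.iptc.org/newscodes/digitalsourcetype/trainedAlgorithmicMedia"
    b"</Iptc4xmpExt:DigitalSourceType></x:xmpmeta>\xff\xd9"
)
stripped = b"\xff\xd8\xff\xd9"  # the same idea, with the metadata removed

print(has_ai_metadata_marker(labeled))   # True
print(has_ai_metadata_marker(stripped))  # False
```

The second check is the whole problem in miniature: once the metadata is gone, a check like this has nothing left to find.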

What does that mean for publishers?

Facebook currently accounts for a large percentage of my traffic. We sometimes use AI images in Facebook posts, just like we sometimes use them on our sites.

We don’t always use AI images, though. I don’t think AI images are always the right solution, and we still have two active Shutterstock accounts with a thousand downloads a month in each.

Still, we occasionally use AI images in Facebook posts.

I’m certainly curious about Facebook’s new labeling system and its effect on users. It wouldn’t surprise me if Pinterest were next to adopt such a system, considering they are probably flooded with AI images these days.

I think this is the important bit in Meta’s statement –

If we determine that digitally created or altered image, video or audio content creates a particularly high risk of materially deceiving the public on a matter of importance, we may add a more prominent label if appropriate, so people have more information and context.

To me, three things stand out here.

1. This isn’t just about AI images

The statement applies to any “digitally created or altered” image. In other words, if you post a fake picture of a dog in a trash can to get attention from your Facebook followers, it doesn’t really matter if you used AI to create it, or just good ole Photoshop.

I like that. I think it’s smart. Sure, generating this using AI is only too easy, whereas doing something similar with Photoshop would take hours. But the principle is the same, and the photo could be construed as misleading regardless of how it was created. What matters is how you use it.

2. The context matters

The statement specifically addresses content that “creates a particularly high risk of materially deceiving the public on a matter of importance.” To me, this reads as saying that not all AI images are the same.

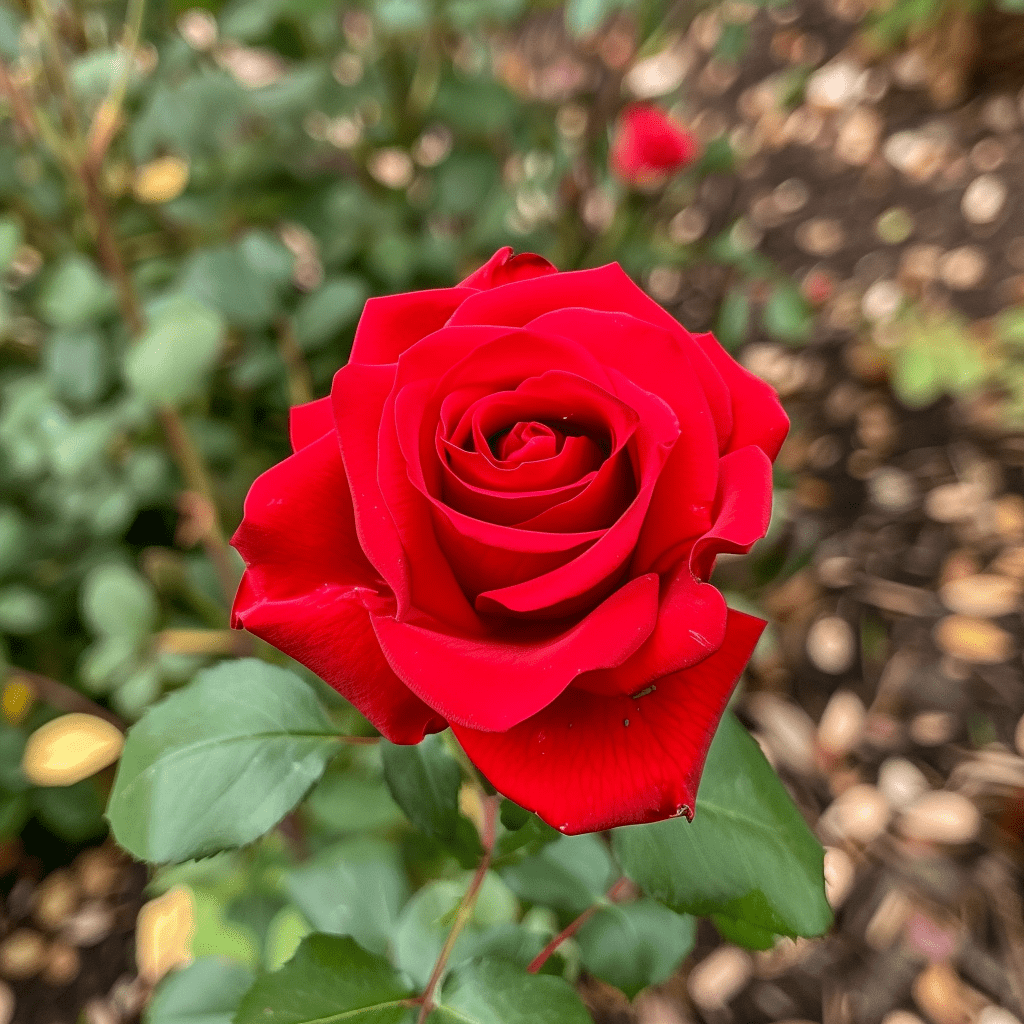

For example, I doubt any Facebook user would mind this image having been generated by AI.

Sure, professional photographers might mind it. That’s because Midjourney has been trained on their content and now replaces their services. But your average Facebook user isn’t being materially deceived on a matter of importance.

A fake image showing Biden and Trump sharing a ride to the White House would probably be more problematic.

Of course, the problem with this definition is that it leaves room for interpretation.

Who decides what creates a particularly high risk of materially deceiving the public? Or what is actually a matter of importance? I can only assume Meta will be the judge.

3. It doesn’t sound like they’re going to be too strict

Again, this is just my interpretation. But they’re saying that even in those high-risk cases, they

“may add a more prominent label if appropriate, so people have more information and context.”

Remember, this is for cases where they can’t actually verify if this is AI content or not. So, to me, it sounds like they can’t be absolutely sure, so they may add a “possibly AI image” tag?

It’s interesting to see that they don’t think AI content is necessarily “bad.” And I tend to agree with that approach. AI image generation has the potential to democratize art. I’m a terrible painter, but I can use AI to come up with the most beautiful images, literally just by imagining them.

Sure, right now, most people don’t know how to use those tools, but that’s temporary. Eventually, using AI to write or create art could become a common form of expression. And freedom of expression is important.

Or, if you want to take a more cynical view, Meta knows that these images drive engagement and keep users on Facebook, ultimately making Meta more money.

My overall take

Meta’s latest announcement reads to me as a balanced approach. It’s not like they’re dead against AI images, only waiting for a valid tool to find them and banish them from social media. They’re just looking to create more transparency in order to prevent abuse.

As a member of the public, I’m happy to see Meta is serious about this. I think it’s high time that we figure out how to handle AI images.

I’m going to continue using AI images on social media and on our websites. I'll use them judiciously, where appropriate, and for what I consider to be valid use cases. I’m not too worried by Meta’s new approach at this point. If anything, it’s making me a tad more comfortable using these images on their platform.

I do wonder what the AI content tags will look like and how they’re going to affect user behavior. Will people avoid AI content, scrolling past it faster? Or maybe it will pique their curiosity more? I guess time will tell.

As always, I’d love to hear from you! Are you using AI images on your sites or social media platforms? How do you think these changes will affect traffic from these channels? Leave a comment and we can keep discussing this here.