Site down! How a prolonged server outage affected our site

One of our largest websites was down for four days earlier this month. Here's what happened.

Life’s never dull when you’re a website publisher. A while ago I posted about the inherent risks that we have in this business and mentioned that I had firsthand experience with most of them.

Well, I have a new box I can now cross out on the “what can go wrong with a site” bingo card:

A fire brought down the server of one of our main sites.

Yup, that can happen too.

Today I want to share that story: what happened, how we dealt with it, how much money we lost, and how the prolonged downtime affected our Google rankings. I’ll also cover my dilemma about the measures we could take to prevent this from happening again.

Table of Contents

- Site down!

- So what happened to our Google traffic

- How much money did we lose

- How to prevent this from happening again

- Lessons Learned

The site in question is a large forums site with around 1.3 million monthly pageviews and a vibrant community of more than 150,000 members.

The site has been hosted with WebNX for several years now, on its own dedicated server. Large forums require a dedicated server to make sure server resources are properly balanced. We were happy with WebNX, as they offered a competitive price with good support and great uptime stats. I don’t think we’ve had any server-related downtime in the five years we’ve been with them.

Managing a dedicated server is not something I could ever do on my own. We have a server management service with AdminGeekz.com. I’ve known the owner, Scott, for many years now, and he’s a total server wizard and specializes in forum server optimization.

Site down!

When an active forums site goes down, people let you know.

On April 4th, I woke up to a multitude of emails and text messages from anxious moderators and site members letting me know they couldn’t access the site.

My awesome server admin was already on the case, but the news wasn’t good. This is all he could say at the time –

The site is offline at the minute as WebNX is having an outage in Utah. Waiting on their updates, the last one was –

“We are aware of a major issue in our Ogden datacenter and should have more info soon.”

AdminGeekz had several hundred clients with WebNX servers, so this was a major issue for the company. They were on the phone with the WebNX people and had constant updates.

Which was a lot more than WebNX offered clients directly. We never got an email from them about the issue. Their site was up (they have multiple datacenters) but their helpdesk was down. Their Facebook page offered limited updates.

In fact, their first real update and explanation only came the following day –

At that point, the site had been offline for more than a day. With no clear ETA as to when we could expect it to be back online. Was our machine ok or was it one of the units that suffered water damage? We had no idea.

Scott was also at a loss. All we knew was that the poor people in Ogden were doing their best on a holiday weekend.

The following day, things didn’t seem much better:

Our site was still down. We had no idea whether our specific server – an actual physical computer out there in Ogden, Utah – was damaged. We couldn’t tell how long it would take to bring it back online either.

Assessing the options

So, what were our options?

We did have good backups of the site. I learned that lesson many years ago when the very same site crashed under a massive DDoS attack. Back then, the most recent backups we could use were six months old. We lost six months’ worth of site data because the more recent backups were corrupt.

Ever since then, we pay for a solid backup service for this site. It costs around $150 a month, but it’s worth it.

One option was to get a new dedicated server somewhere else and build the site there using the backup. Rebuilding a huge forum from a backup takes time and effort, but can be done within a few hours if need be.

The other option was to keep waiting and hope that our server didn’t have water damage. In that case, it would be a simple matter of flipping the switch over at Ogden and getting the site back up and running within minutes.

We opted to wait for one more day.

Keeping our visitors informed

A forums site is more than just a website on a server. It’s also an actual community of people who are used to meeting each other online, some on a daily basis. We had to reassure them that we’re still around.

We did two things –

- AdminGeekz redirected the domain to a page on one of their servers with an announcement that explained the site was down and we were working on getting it back online as soon as possible.

- We posted updates on our Facebook page about the situation.

Of course, I also communicated with our moderators via email the whole time, letting them know what was going on.

The Nail Biting Part

One more day went by and still no word from WebNX. People on their Facebook page reported that their servers were coming back to life. Ours wasn’t. This was the third day of the site being offline.

I was worried not just about the direct loss of revenue, but also about the potential effect this might have on our Google rankings. The site has tens of thousands of pages ranking on Google for tons of longtail queries. How long would Google let us keep the top rankings if people and web crawlers couldn’t access the information in the pages?

That’s why on the third day, we decided enough was enough. We had to bring the site back online on a new server.

Switching to a new server

Once the decision was made, things went rather fast. By the morning of the fourth day, Scott sent me a link to a machine that would meet our needs. With a dedicated server, you need to verify your identity by uploading pictures of your passport and credit card and have an actual person verify them. Once we got past that hurdle, our new server was set up within the hour. AdminGeekz then began the arduous process of resurrecting the site from the backups and three hours later we were back online.

And there was much rejoicing, as members found their way back to the site and started a celebration thread where they shared how difficult it was to be away and how much they missed everyone.

Moving to a new hosting solution proved to be the right decision. As it happened, our server was one of those that had been damaged. A week later, WebNX still had 30% of the servers out of order. Had we stayed with them, the downtime would have been much worse.

So what happened to our Google traffic

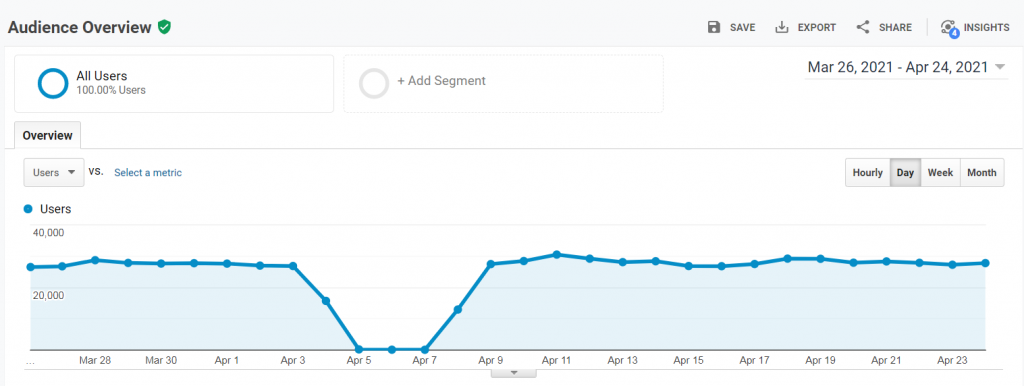

I’m happy to report that traffic bounced back right away. This is what the Google Analytics chart looks like –

The server went down sometime during April 3 and was brought back on April 8. Three full days and two half days with no traffic. No discernible effect on our traffic following the event.

How much money did we lose

This is an interesting question because we were right in the process of switching ad networks. The site had just been accepted into Adthrive that very week and we were supposed to start tweaking it on April 5. The move was delayed by five days because of that.

The site makes around $500 a day with Adthrive, so I estimate that we lost around $2,000 in revenue.

Did we get Compensated?

When the data center went down, angry customers cried foul all over their Facebook page. After all, our server was just one out of several thousand affected servers. We’re not talking just direct clients either. Entire hosting companies were renting machines from WebNX, providing customers with shared hosting from their dedicated WebNX servers.

If I had to guess, an outage like that could cost thousands of dollars per machine per day in lost advertising revenue. Just think about it. Where niche sites are concerned, a server should be able to easily serve 5-10 million pageviews a month. Even at $10 RPM, we’re talking $50-$100K in revenue each month.

That’s before you even begin to factor in additional losses. All of those small hosting companies that must have lost their clients and will possibly be held responsible for their clients’ losses.

With thousands of servers, we’re talking about potential damages of tens of millions of dollars.
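For anyone who wants to play with that back-of-the-envelope math, here is a rough Python sketch. Only the 5-10 million pageviews and the $10 RPM come from the estimate above. The 30-day month, the 3,000 servers and the four days of downtime are illustrative assumptions, not figures reported by WebNX, and the function name is made up for this example.

```python
def monthly_ad_revenue(pageviews_per_month: int, rpm_usd: float) -> float:
    """Monthly revenue, where RPM is revenue per 1,000 pageviews."""
    return pageviews_per_month / 1000 * rpm_usd


# Figures from the estimate above: 5-10 million pageviews at $10 RPM.
low = monthly_ad_revenue(5_000_000, 10.0)
high = monthly_ad_revenue(10_000_000, 10.0)

# Assumption: a 30-day month, to turn monthly revenue into a daily figure.
low_per_day = low / 30
high_per_day = high / 30

# Hypothetical inputs for illustration only: 3,000 servers down for 4 days.
servers = 3_000
days_down = 4
total_low = low_per_day * servers * days_down
total_high = high_per_day * servers * days_down

print(f"Per server per month: ${low:,.0f} to ${high:,.0f}")
print(f"Per server per day:   ${low_per_day:,.0f} to ${high_per_day:,.0f}")
print(f"All servers, {days_down} days:  ${total_low:,.0f} to ${total_high:,.0f}")
```

Change `servers` and `days_down` to see how quickly the total moves. The point is the order of magnitude, not the exact number.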

That is, if WebNX were held accountable.

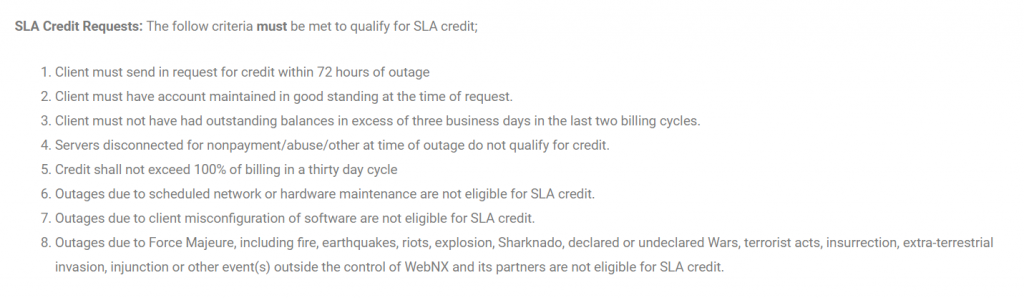

As the company was quick to point out, while their Service Agreement guarantees 100% uptime, it also limits the extent of the compensation –

I’m not a lawyer, but it seems to me a fire falls under the Force Majeure article there. And even if you were to claim that the company was at fault for using water sprinklers in a datacenter, the agreement limits the amount of compensation to one month’s worth of hosting.

Which makes sense, I guess. No hosting company can pay the extent of financial loss its clients suffer from an outage like this one. If they had to insure against that, we’d all be paying the additional costs in increased hosting fees.

Once we were done moving to the new server and the proverbial dust settled on our end, I cancelled the WebNX server and asked for a refund on that month.

Did I get it? Well, isn’t that a fantastic question.

You see, I never checked. WebNX replied to say that the server had been canceled and that they would issue the refund. And I just forgot all about it and moved on, assuming they would.

Writing this post just now, I decided to actually check to make sure. Not only did they not issue the refund, but they also billed us one more time… You see, I contacted them on the payment day, and as far as I can tell, the Paypal subscription was canceled right after that last payment.

I contacted WebNX again, telling them they accidentally charged us one more time, and that we never got that refund. These guys have a good reputation, so I hope this was just an honest mistake that will soon be corrected.

How to prevent this from happening again

I had a long chat with Scott from AdminGeekz on how we can prevent something like that from happening again. The only foolproof method would be to have a mirror site in place. That’s a plausible setup, used by many large sites.

If we have a mirror site, then if one server goes AWOL, traffic can immediately be directed to the working server. Zero downtime.
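As a rough illustration of that failover idea, here is a minimal Python sketch that picks the first server passing a health check. It is not how AdminGeekz or any particular host sets this up. In practice the switch is typically handled at the DNS or load balancer level, and the mirror also needs its data kept in sync. The hostnames and the function names `is_up` and `pick_server` are hypothetical.

```python
import urllib.error
import urllib.request
from typing import Callable, Optional, Sequence


def is_up(url: str, timeout: float = 5.0) -> bool:
    """Return True if the URL answers with an HTTP status below 400."""
    try:
        with urllib.request.urlopen(url, timeout=timeout) as response:
            return response.status < 400
    except (urllib.error.URLError, OSError):
        # Covers HTTP errors, refused connections, DNS failures and timeouts.
        return False


def pick_server(
    servers: Sequence[str], check: Callable[[str], bool] = is_up
) -> Optional[str]:
    """Return the first server that passes the health check, or None."""
    for url in servers:
        if check(url):
            return url
    return None


# Hypothetical hostnames. A stub check stands in for real network calls here,
# pretending that the primary server is down.
servers = ["https://primary.example.com", "https://mirror.example.com"]
down = {"https://primary.example.com"}
active = pick_server(servers, check=lambda url: url not in down)
print(active)
```

With the default `check=is_up`, the same call would probe the real URLs instead of the stub.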

The problem with a mirror server is that it costs money. In our case, probably another $300 a month.

Is it worth it? Should we pay $3,600 a year for a solution that will prevent a loss of $2,000 once a decade? Probably not.
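Here is that comparison as a small Python sketch, using only the numbers above. It treats the outage as a $2,000 loss spread evenly over ten years, which is a simplifying assumption, and it only counts lost ad revenue. It ignores harder-to-price risks such as rankings or member goodwill. The function name is made up for this example.

```python
def expected_annual_loss(loss_per_outage: float, years_between_outages: float) -> float:
    """Average yearly cost of outages: one outage's loss spread over the gap between outages."""
    return loss_per_outage / years_between_outages


mirror_cost_per_year = 300 * 12                        # $300 a month
loss_without_mirror = expected_annual_loss(2_000, 10)  # $2,000 once a decade

print(f"Mirror server:        ${mirror_cost_per_year:,} a year")
print(f"Expected outage loss: ${loss_without_mirror:,.0f} a year")
print("Mirror pays for itself:", loss_without_mirror > mirror_cost_per_year)
```

Plugging in a higher loss per outage or more frequent outages shows where the answer starts to change.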

If and when we get to the point where the sites on a particular server generate $5K a day, then yes, we’ll have to seriously consider this option. Can’t wait for that to happen!

Lessons Learned

I waited for a couple of weeks before posting about this. I wanted to give myself some time to think about everything, talk to Scott and make sure everything was in working order again.

Here are my lessons so far. Maybe they can help someone else in dealing with a similar situation.

Anything can happen – keep your cool

Being your own boss has its perks but it doesn’t mean your life becomes stress-free.

You never know what might be waiting in your inbox in the morning –

- Your site was hacked.

- A copyright troll is demanding money.

- An angry visitor threatens to sue you.

- There was a fire in the datacenter and your server is out.

Anything can happen. All of the above (and much more!) has happened to me. Sometimes more than once.

You have to accept that and keep your cool. And then deal with the crisis in the best way you can.

Backups are crucial

Always have a current backup. Always. It doesn’t have to cost you money if your site isn’t too big. See my post here about how we back up our niche sites.
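Since old and corrupt backups are exactly what bit us years ago, a quick automated check is worth having. Below is a minimal Python sketch, assuming the backup is a single gzip-compressed file such as a database dump. It is not the check our backup service runs. The file path, the 24-hour threshold and the function name are all hypothetical.

```python
import gzip
import os
import time
import zlib


def backup_is_usable(path: str, max_age_hours: float = 24.0) -> bool:
    """Return True if the gzip backup at `path` is recent and reads cleanly."""
    try:
        age_seconds = time.time() - os.path.getmtime(path)
        if age_seconds > max_age_hours * 3600:
            return False  # too old to count as a current backup
        # Reading to the end makes gzip verify the checksum stored in the file.
        with gzip.open(path, "rb") as archive:
            while archive.read(1024 * 1024):
                pass
        return True
    except (OSError, EOFError, zlib.error):
        # Missing file, truncated archive, or corrupt compressed data.
        return False


# Hypothetical path; prints False if the file is missing, stale or corrupt.
print(backup_is_usable("/backups/forum-latest.sql.gz"))
```

A check like this only proves the file can be read end to end. The real test of a backup is still restoring it somewhere.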

A good server admin is worth their weight in gold

Yes, some hosts have great support. That’s important too. But I like having a good server admin on my side as well. When the crisis happens at the host level, they may not be able to deal with your particular site as fast as you’d want them to.

With a separate server admin service, we were able to move the site quickly and efficiently to a different hosting service.

Don’t keep all your eggs in one basket

Had this been our only site, this entire episode would have been a thousand times more stressful.

Since we have a diverse portfolio of 15 websites, I could keep myself and our team busy working on the other sites. I also knew the other sites kept generating revenue, mitigating some of the losses.

Blog about things

Thanks to this blog post I’m writing now, I actually circled back to the refund request and discovered that we didn’t get it! See, blogging about your work can really pay off 😉

I hope that blogging about this will help others too. As always, leave me a comment and let me know if you found this post helpful. And if you have your own outage stories to share, I’d love to hear about them and learn from others who have coped with similar situations.

This sounds like my worst nightmare!! Glad everything is running again! Sounds like a pain and just goes to show that anything can happen in this business.

Now excuse me while I go make a few extra backups for my sites haha.

Wow Anne, that must have been a bad four days. I’ve never suffered a site being down longer than an hour or so but I’ve had a big site deindexed from Google as a result of a malware attack. That lasted several weeks and initially I had no idea what the problem was so wasn’t sure I could fix it. Thanks for the detailed write-up. I agree that the cost of a mirror site is probably not worth it given the rarity of this event. I wonder, though, if the site made $5,000 per day whether a mirror site’s cost wouldn’t go up that much making it worth it.

Thanks, Anne. Quick question: do you have all your sites with the same host, or have you spread that out also?

God Bless Greg

Eeek happy it ended well but how stressful.

I had an Amazon affiliate site that ended up being down for three days a few years ago thanks to shocking Bluehost support combined with my daughter suddenly being in hospital, so we didn’t have the mental capability to work on moving the site elsewhere at that time. It actually never recovered in Google. Tons of money lost there, so it has me extra on edge over outages.

One of our servers got hacked twice this month… All good now (I hope) but it’s just so stressful, especially as I was travelling one of the times. This side of running a digital media business is definitely the side I hate most.

Thanks for sharing the experience. I’m glad you are able to recover, thanks to the safety measures you have implemented over the years. There’s no such thing as overly cautious then.

This is a fantastic read, that no other web publisher (as far as I know) dares to talk about! Very delighting, and hoping that it never happens to me!

Thanks Anne,

how do you determine if a topic is underserved?

best regards from Germany

Dan

Hi Dan,

In a nutshell, check the top results on Google and see if they answer the question well enough. Do you think you could do better, and improve the answer in a significant way? If so, then the topic is probably under-served.

Of course, there’s the question of the search volume, but that’s the other aspect you need to be looking into.

I hope that helps.